Why We Aren't Achieving the Copilot Experience in Data Systems

Understanding the Challenges and Exploring Solutions: Data Reconnaissance

👋 Hi! I am CL Kao, CEO of Recce and former version control system, open data, and civic tech nerd. Find me on LinkedIn if you ever want to connect!

Data engineering can be as streamlined and efficient as modern software development with the “copilot” experience - AI-assisted coding tools that enhance productivity. But it’s not there yet today.

A recent GitClear whitepaper also reveals a paradox: GitHub's Copilot, speeds up coding by 55% but may reduce code maintainability, represented by “code churn” metrics in the research.

The insight hinted at reasons why a copilot-like experience is challenging in data engineering and analytics. In this article, we examine the unique challenges of the copilot experience for data systems like dbt, the emerging standard for in-warehouse data transformations, and explore pathways to a more effective copilot experience.

I am using dbt as an example because it is the most pull-request ready component in the modern data systems. This issue extends to traditional data pipelines, modern pipelines like Dagster, and other data/AI systems. Small code changes would lead to downstream data or behavioral changes that are hard to have predefined correctness.

The coding assistant experience

of dbt Labs wrote:Perhaps the most surprising thing about this is that AI seems to be impacting the lives of data professionals more slowly than the lives of software engineers. Even data professionals who primarily spend their days writing code! Software engineers are already seeing significant efficiency uplift via current versions of Github Copilot; can’t data people benefit from the same thing?

My guess is that this is because most application code isn’t as tightly coupled to the underlying data. It more often contains type information. It more reliably has clean abstractions. So an LLM can, with this code, a prompt, and no other context, do useful work. But when is the last time that you were able to build a dbt model without heavily interacting with your underlying data store? I don’t know that I have ever written dbt code without a database terminal in front of me. No one has recreated that process in an LLM-enabled code authoring experience yet.

The current crop of AI coding assistants, including GitHub Copilot, continue.dev, askcodi.ai, and codeer.ai, offer a seamless coding experience for software developers. They enable programmers to stay in the 'flow', reducing the need to consult external references or tools.

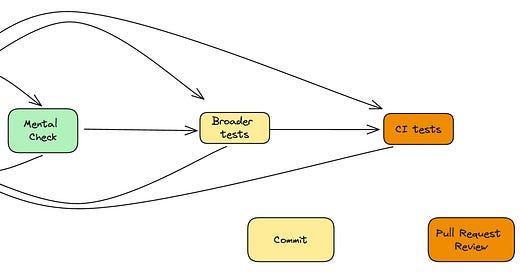

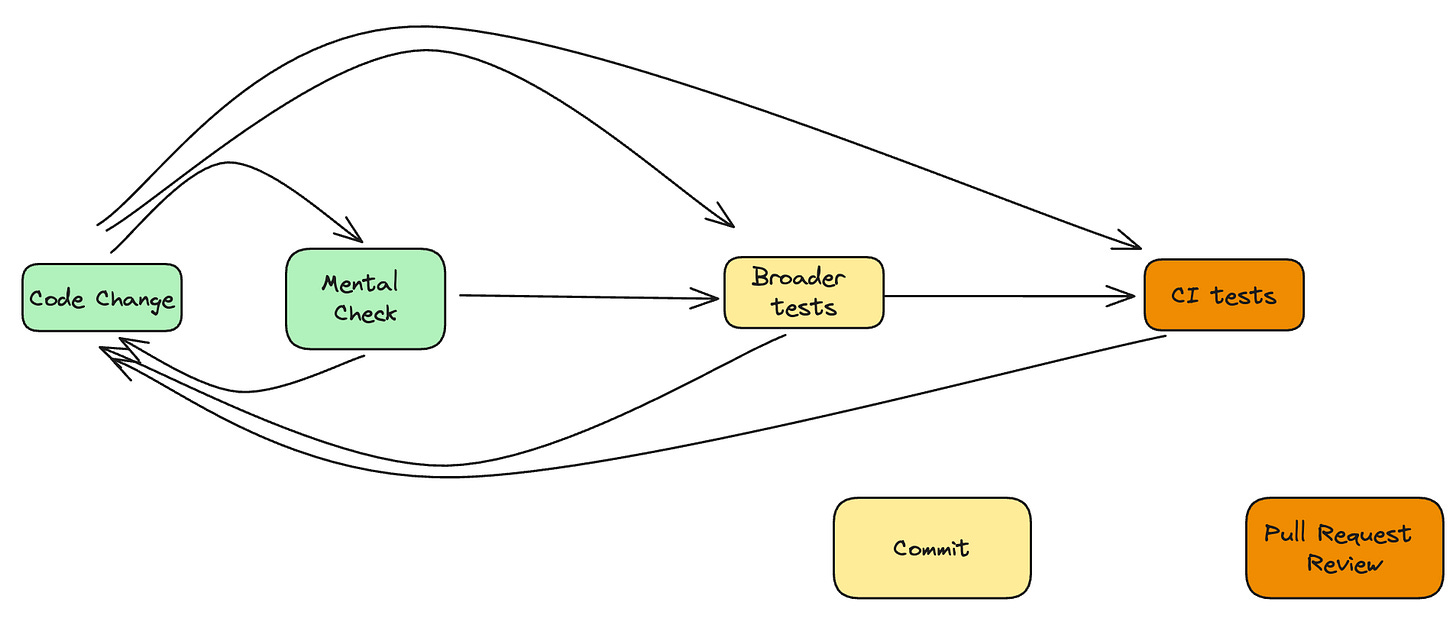

This efficiency is partly due to the nature of software development, where developers usually have the mental model of code correctness. You can run that “mental check” in your head to assess the correctness when presented with code suggestions. You then accept the changes, run real tests, and eventually create a pull request to kick off more tests in CI and for code review.

The concept of a 'scoped-down boundary of correctness' is pivotal. It allows developers to tackle larger problems by breaking them down into smaller verifiable functions. When LLMs understand these boundaries and intermediate errors, they pave the way for autopilot-style experiences like smol-developer.

While coding assistants show promise for software development, we are still far from this when working with data systems like dbt.

The missing bit in data system changes

For data systems like dbt, the scenario is markedly different. Here, correctness is not static but ephemeral, heavily reliant on upstream data semantics.

With data, the question is, how do you know "a change is desirable, or even safe"? Let’s delve into the specific challenges:

Data Preparation and Testing Environment: Data systems need a detailed environment replicating real-world data scenarios, much earlier in the development cycle compared to software development. This used to be prohibitively expensive, but innovations like zero copies in modern data warehouse and open table formats are making this viable.

The Limitations of Tests and CI: While tests and CI are beneficial for code style and data conformance, they don’t fully address the unique needs of data system changes. The cost (of running and maintaining them) is relatively high to their value-add. (You should absolutely have tests and CI, but this partly explains why 60% of dbt projects don’t run tests.)

Reliance on Ad-hoc Verification: In the process of making changes with systems like dbt, there’s often a lot of fiddling and adhoc verification of work-in-progress. tools like dbt-audit-helper and data-diff can compare the resulting data changes, but interpreting whether a data change is desirable still requires human judgment.

In essence, the “mental check” step in data engineering requires a more elaborate verification mechanism against real-world data sets, far earlier than what traditional software changes would require. This breaks engineers’ mental flow, and makes the current generation of coding assistant experience not as useful for data systems.

After analyzing thousands of public dbt pull requests and engaging with seasoned practitioners, we’ve identified a critical component: the representation of proof-of-correctness during the change-making process. Teams like Cal-ITP and mattermost are using free form pull request checklists to ensure the right verifications are performed in the right place, at the right level of details, and that nothing is overlooked.

This kind of proof-of-correctness representation is vital for agreeing on the semantics of changes, and how to verify them.

You can read more about the best current practices of dbt PR checklists here: Use this updated pull request comment template for your dbt data projects.

However the proofs-of-correctness are often presented as query screenshots or links to warehouse query results. They are hard to interact with, difficult to reverify, and may be outdated when ongoing changes are made.

Enter Recce: the data change checklist on steroids

Given these challenges, we took a step back to think about what the ideal tool for data verification might look like..

The result is Recce:

Recce (/ˈrɛki/), short for Data Reconnaissance, is an open source toolkit for dbt users to record proof of correctness as reproducible checks. Ad-hoc queries or spot checks during development can now be effectively used to communicate the expectations of a change, and speed up the review process. This increases velocity without losing confidence.

Here’s an example pull request for correcting customer lifetime value definition in the jaffle-shop repo. Starting with the lineage diff, the author attached Recce checks as proof-of-correctness and walked the PR reviewer through the logic and intention of verifications.

Recce checks are different from general tests because they are specific to the context of changes being made. They are also:

flexible representations of acceptance of schema changes, interpretation of profile statistics changes, interpretation of row-by-row value changes, interpretation of downstream impacts, or even communication with stakeholders.

tailored to the level of scrutiny required by impact risks, so high signal over noise

live and can be reproduced when you make further changes.

This fills the gap beyond traditional dbt tests or other unit test solutions, to semantically represent how we expect data system changes to be verified.

We’ve released Recce as an open source CLI tool for the dbt code change and review process, available on github. We'd love to hear your feedback to see how this may help your current dbt workflow.

We are hard at work on a cloud experience that further helps teams to collaborate by allowing all stakeholders to interact with the dynamic checklist. If you want to hear more about Recce Cloud, subscribe to the newsletter.

I believe this technique applies to any logic change to a complex data system, and that tools like Recce are how we can build a true copilot experience for complex data systems. In a future article, we'll share our plans for using agents to making changes to data system by planning proofs-of-correctness and collaborating with humans.

Conclusion

While still in their infancy, coding assistants have proven valuable for software developer velocity. To make them work for data, we’ve identified a key gap: the structural representation of proof-of-correctness. Recce is the open source toolkit we are working on to address this issue.

Recce provides a layer of agreement on the semantics of changes, and how to verify them. While Recce provides tools that are incredibly valuable to human-to-human collaboration, the same tools will make it much easier for AI assistants to interact with your data systems.

We invite you to explore how Recce can help with your dbt workflow, and to share in the comments about how you are currently verifying your changes to data systems. Join us on the journey to make data productive! Give us a star, play with it on your dbt projects, and feel free to get in touch on LinkedIn.